This article is part of #ServerlessSeptember. You'll find other helpful articles, detailed tutorials, and videos in this all-things-Serverless content collection. New articles are published every day — that's right, every day — from community members and cloud advocates in the month of September.

Find out more about how Microsoft Azure enables your Serverless functions at https://docs.microsoft.com/azure/azure-functions/.

Azure Functions recently announced the general availability of its Python language support. We can use Python 3.6 and Python's large ecosystem of packages, such as TensorFlow, to build serverless functions. Today, we'll look at how we can use TensorFlow with Python Azure Functions to perform large-scale machine learning inference.

Overview

A common machine learning task is the classification of images. Image classification is a compute-intensive task that can be slow to execute. And if we need to perform classification on a stream of images, such as those coming from an IoT camera, we would need to provision a lot of infrastructure to get enough computational power to keep up with the volume and velocity of images that are being generated.

Because serverless platforms like Azure Functions automatically scale out with demand, we can use them to perform machine learning inferencing and be confident that they can keep up with high-volume workloads.

Without scaling, this is how slowly our app currently runs:

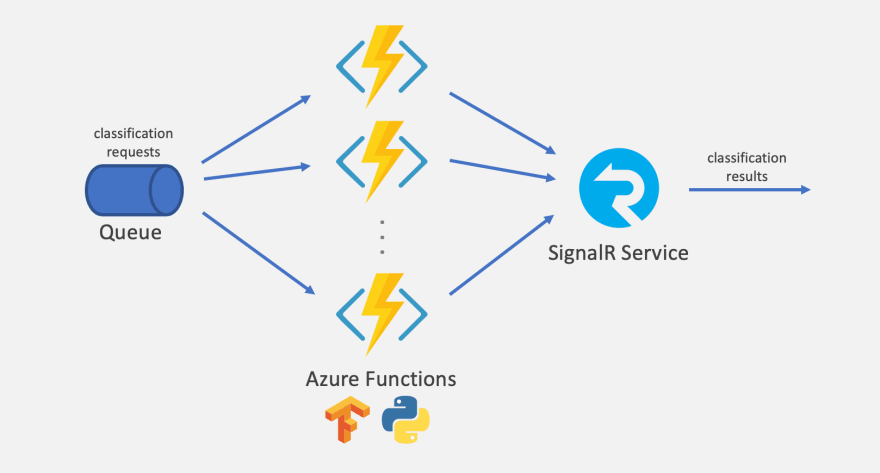

The application is pretty straightforward. Here are the key parts:

- Azure Storage Queue is a low-cost but highly scalable message queue. We'll create a message on the queue with the URL for each image that we want to classify.

- Azure Functions is Azure's serverless functions platform. For each image URL in the queue, a Python function will run a TensorFlow model and classify the image. Azure Functions is able to scale out and parallelize this work.

- Azure SignalR Service is a fully managed real-time message service that supports protocols like WebSockets. Each classification result is broadcast to a status page running in one or more browsers using SignalR Service.

Build the app

The app we're building will run an image through a TensorFlow model to predict if it contains a cat or a dog. It consists of the Azure services above. We will first build and run the function app locally.

Create the Azure services

We start by provisioning the following Azure services in the Azure portal.

- Create an Azure Function app with the following parameters:
  - Linux consumption plan
  - Python
  - New Storage account (we'll also create our queue in this account)

- Create an Azure SignalR Service account with the following parameters:
  - Same region as your function app
  - Free tier

Create the function app

Next, we'll create a Python function app using the Azure Functions CLI. For the complete details, take a look at this Python Azure Functions machine learning tutorial.

func init --worker-runtime python

Next, we'll create a function named classifyimage that triggers whenever there's a message in our queue.

func new --template AzureQueueStorageTrigger --name classifyimage

A function is created in a folder named classifyimage.

To broadcast classification results through Azure SignalR Service with its Azure Functions bindings, we need to add the extension to our function app. One way to do that is by running this command.

func extensions install -p Microsoft.Azure.WebJobs.Extensions.SignalRService -v 1.0.0

We'll edit our function in a bit. But first, we need to import a TensorFlow model that we'll use to classify the images.

Import a TensorFlow model

For this app, we import a TensorFlow model that we've trained using Azure Custom Vision Service that classifies images as "cat" or "dog". For information on how to train and export your own model to recognize almost anything you can think of, check out these instructions.

The model consists of model.pb and labels.txt. There's a model here that has already been trained. We can copy the files into the folder that contains the classifyimage function.

Use the model in the function

We'll need some packages such as TensorFlow. Add them to requirements.txt and install them:

azure-functions
tensorflow
Pillow
requests

pip install --no-cache-dir -r requirements.txt

classifyimage/function.json describes the trigger, inputs, and outputs of the function. We'll update the queue name to images and add a SignalR Service output binding to the function.

{
  "scriptFile": "__init__.py",
  "bindings": [
    {
      "name": "msg",
      "type": "queueTrigger",
      "direction": "in",
      "queueName": "images",
      "connection": "AzureWebJobsStorage"
    },
    {
      "type": "signalR",
      "direction": "out",
      "name": "$return",
      "hubName": "imageclassification"
    }
  ]
}
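To make the link between function.json and the Python code more concrete, here is a small sketch that lists each binding and where it shows up in the function: a binding named `$return` maps to the function's return value, and any other name maps to a parameter of `main`. The helper `describe_bindings` is hypothetical and exists only for illustration; it is not part of the Azure Functions SDK.

```python
import json
from typing import List

# A copy of the function.json from this article, inlined so the sketch is self-contained.
FUNCTION_JSON = """
{
  "scriptFile": "__init__.py",
  "bindings": [
    {"name": "msg", "type": "queueTrigger", "direction": "in",
     "queueName": "images", "connection": "AzureWebJobsStorage"},
    {"type": "signalR", "direction": "out",
     "name": "$return", "hubName": "imageclassification"}
  ]
}
"""


def describe_bindings(function_json: str) -> List[str]:
    """Hypothetical helper: summarize how each binding reaches the Python code."""
    config = json.loads(function_json)
    summary = []
    for binding in config["bindings"]:
        name = binding["name"]
        if name == "$return":
            target = "return value of main()"
        else:
            target = f"parameter '{name}' of main()"
        summary.append(f"{binding['type']} ({binding['direction']}) -> {target}")
    return summary


for line in describe_bindings(FUNCTION_JSON):
    print(line)
```

Reading the bindings this way shows why the function takes a single `msg` parameter and returns a string: the queue message comes in as `msg`, and whatever the function returns is handed to the SignalR output binding.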

Then we'll update the body of our function (classifyimage/__init__.py) to download the image using the URL from the queue message, run the TensorFlow model, and return the result.

import json
import logging

import azure.functions as func

from .predict import predict_image_from_url


def main(msg: func.QueueMessage) -> str:
    # The queue message body is the URL of the image to classify.
    image_url = msg.get_body().decode('utf-8')

    # Download the image and run it through the TensorFlow model.
    results = predict_image_from_url(image_url)
    logging.info(f"{results['predictedTagName']} {image_url}")

    # The returned JSON goes to the SignalR output binding ("$return").
    return json.dumps({
        'target': 'newResult',
        'arguments': [{
            'predictedTagName': results['predictedTagName'],
            'url': image_url
        }]
    })

Most of the work is done by the helper functions in predict.py which can be found here and copied into our function app. The function also returns a message that is broadcast over Azure SignalR Service. The message consists of the image URL and the prediction ("cat" or "dog").
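predict.py itself is not reproduced in this article. As a rough sketch of its final step only, and assuming the model produces one probability per line of labels.txt in the same order, the predicted tag could be chosen as shown below. The names `parse_labels` and `pick_top_label` and the sample values are hypothetical; only the `predictedTagName` key comes from the function code above.

```python
from typing import Dict, List, Sequence


def parse_labels(labels_text: str) -> List[str]:
    """Split the contents of a labels.txt-style file into one label per non-empty line."""
    return [line.strip() for line in labels_text.splitlines() if line.strip()]


def pick_top_label(probabilities: Sequence[float], labels: Sequence[str]) -> Dict[str, object]:
    """Hypothetical helper: return the label with the highest probability."""
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per label")
    best_index = max(range(len(labels)), key=lambda i: probabilities[i])
    return {
        "predictedTagName": labels[best_index],
        "probability": float(probabilities[best_index]),
    }


# Made-up values for illustration; a real run would take them from the model output.
labels = parse_labels("cat\ndog\n")
result = pick_top_label([0.12, 0.88], labels)
print(result["predictedTagName"])
```

The real helper also has to download the image and prepare it for the model; those steps depend on how the model was exported and are left out of this sketch.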

Configure and run the app locally

We configure an Azure Functions app locally using local.settings.json. Add the connection strings to our Storage account and SignalR Service.

{
  "IsEncrypted": false,
  "Values": {
    "FUNCTIONS_WORKER_RUNTIME": "python",
    "AzureWebJobsStorage": "<STORAGE_CONNECTION_STRING>",
    "AzureSignalRConnectionString": "<SIGNALR_CONNECTION_STRING>"
  }
}
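Leaving a placeholder in local.settings.json is an easy mistake to make. The sketch below, which is not part of the Azure Functions tooling, parses the settings and reports any of the three values used in this article that are missing or still look like a `<PLACEHOLDER>`. The function name `find_unset_settings` is an assumption made for illustration.

```python
import json
from typing import List

# The three settings this article relies on.
REQUIRED_SETTINGS = (
    "FUNCTIONS_WORKER_RUNTIME",
    "AzureWebJobsStorage",
    "AzureSignalRConnectionString",
)


def find_unset_settings(settings_json: str) -> List[str]:
    """Hypothetical helper: list required settings that are missing or placeholders."""
    values = json.loads(settings_json).get("Values", {})
    unset = []
    for key in REQUIRED_SETTINGS:
        value = values.get(key, "")
        # Treat empty values and "<LIKE_THIS>" placeholders as not configured.
        if not value or (value.startswith("<") and value.endswith(">")):
            unset.append(key)
    return unset


# Example input with one placeholder and one missing setting.
example = json.dumps({
    "IsEncrypted": False,
    "Values": {
        "FUNCTIONS_WORKER_RUNTIME": "python",
        "AzureWebJobsStorage": "<STORAGE_CONNECTION_STRING>",
    },
})
print(find_unset_settings(example))
```

To check a real file, pass its contents instead of the example string, for instance `find_unset_settings(open("local.settings.json").read())`.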

Now we run the function app.

func start

Using a tool like Azure Storage Explorer, we create a queue named images in our Storage account and add a message containing an image URL. We should see our function execute.

Deploy and run the app at scale

Now that the app runs locally, it's time to deploy it to the cloud!

Run the following Azure Functions Core Tools command to deploy our app to the Azure Function app we created earlier. We're using remote build so that it brings in the proper dependencies required to run on Linux.

func azure functionapp publish <FUNCTION_APP_NAME> -b remote

We also need to configure the Storage account and SignalR Service connection strings in our function app's configuration settings.

To add a lot of image URLs to the queue, run a load generator script like this one.
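The linked script is not reproduced here. As a minimal sketch of the idea, the function below sends one queue message per image URL through any client object that exposes a `send_message(text)` method. The `QueueClient` class in the `azure-storage-queue` package has a method with that name, but using it as the client here is an assumption, as are the names `enqueue_image_urls` and `RecordingClient` and the example URLs. The messages are base64-encoded on the assumption that the queue trigger is left at its default setting, which expects base64-encoded text.

```python
import base64
from typing import Iterable, List


def encode_message(text: str) -> str:
    """Base64-encode a message body as UTF-8 text."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def enqueue_image_urls(queue_client, image_urls: Iterable[str]) -> int:
    """Send one message per URL and return the number of messages sent.

    `queue_client` is any object with a send_message(str) method (assumption).
    """
    sent = 0
    for url in image_urls:
        queue_client.send_message(encode_message(url))
        sent += 1
    return sent


class RecordingClient:
    """Stand-in client for a dry run; it only records what would be sent."""

    def __init__(self) -> None:
        self.messages: List[str] = []

    def send_message(self, content: str) -> None:
        self.messages.append(content)


# Hypothetical URLs; replace them with real image locations.
urls = [f"https://example.com/images/{i}.jpg" for i in range(3)]
client = RecordingClient()
count = enqueue_image_urls(client, urls)
print(count, base64.b64decode(client.messages[0]).decode("utf-8"))
```

Keeping the client as a parameter makes it possible to try the generator without touching a real queue, then swap in a real queue client once the output looks right.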

The sample app also contains a status page that connects to Azure SignalR Service, receives the classification results, and displays them in real-time. To deploy this page to Azure, check out the details on the SignalR Service Serverless Developer Guide.

With the status page opened in the browser, each time a function executes and classifies an image, it'll push the result to the status page. As more and more messages are added to the queue and the Azure Functions platform detects that the queue length is growing, the function app scales out to more and more instances. The number of images classified per second will go up as the function app scales out.
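To get a feel for why scaling out matters, here is a back-of-the-envelope model. It assumes each instance classifies one image at a time at a fixed speed, and it ignores cold starts, download time, and the delay before new instances come online, so the numbers are illustrative only and are not measurements from this app.

```python
import math


def images_per_second(instances: int, seconds_per_image: float) -> float:
    """Idealized throughput when every instance works at the same fixed speed."""
    return instances / seconds_per_image


def seconds_to_drain(queue_length: int, instances: int, seconds_per_image: float) -> float:
    """Idealized time to empty the queue when instances work in lockstep rounds."""
    rounds = math.ceil(queue_length / instances)
    return rounds * seconds_per_image


# Made-up inputs: 1,000 queued images and 0.5 seconds per classification.
for instances in (1, 10, 50):
    print(instances,
          images_per_second(instances, 0.5),
          seconds_to_drain(1000, instances, 0.5))
```

In this simplified model, throughput grows linearly with the number of instances, which is the effect the status page makes visible as the function app scales out.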

Summary

In this article, we saw that Azure Functions supports Python and its many popular packages, including TensorFlow. This lets us run machine learning inferencing tasks at a large scale. To learn more, see the Azure Functions documentation at https://docs.microsoft.com/azure/azure-functions/.
