When we give a live presentation, whether online or in person, there are often folks in the audience who are not comfortable with the language we're speaking or who have difficulty hearing us. Microsoft created Presentation Translator to solve this problem in PowerPoint by sending real-time translated captions to audience members' devices.

In this article, we'll look at how, with relatively few lines of code, we can build a similar app that runs in the browser. It will transcribe and translate speech using the browser's microphone and broadcast the results to other browsers in real time. And because we are using serverless and fully managed services on Azure, it can scale to support thousands of audience members. Best of all, these services all have generous free tiers, so we can get started without paying for anything!

Overview

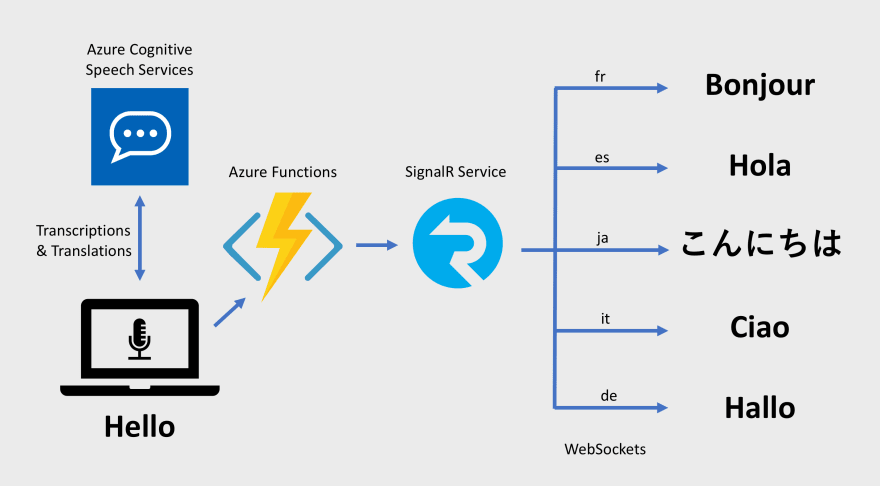

The app consists of two projects:

- A Vue.js app that is our main interface. It uses the Microsoft Azure Cognitive Services Speech SDK to listen to the device's microphone and perform real-time speech-to-text and translations.

- An Azure Functions app providing serverless HTTP APIs that the user interface will call to broadcast translated captions to connected devices using Azure SignalR Service.

Speech SDK for Cognitive Services

Most of the heavy lifting required to listen to the microphone from the browser and call Azure Speech Services to retrieve transcriptions and translations in real time is done by the service's JavaScript SDK.

The SDK requires a Speech Services key. You can create a free account (up to 5 hours of speech-to-text and translation per month) and view its keys by running the following Azure CLI commands:

```bash
az cognitiveservices account create -n $SPEECH_SERVICE_NAME -g $RESOURCE_GROUP_NAME --kind SpeechServices --sku F0 -l westus

az cognitiveservices account keys list -n $SPEECH_SERVICE_NAME -g $RESOURCE_GROUP_NAME
```

You can also create a free Speech Services account in the Azure portal using this link (select F0 for the free tier).

Azure SignalR Service

Azure SignalR Service is a fully managed real-time messaging platform that supports WebSockets. We'll use it in combination with Azure Functions to broadcast translated captions from the presenter's browser to each audience member's browser. SignalR Service can scale up to support hundreds of thousands of simultaneous connections.

SignalR Service has a free tier. To create an instance and obtain its connection string, use the following Azure CLI commands:

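```bash
# Free_F1 is the free tier; Serverless mode suits a backend made only of Azure Functions
az signalr create -n $SIGNALR_NAME -g $RESOURCE_GROUP_NAME --sku Free_F1 --service-mode Serverless -l westus

az signalr key list -n $SIGNALR_NAME -g $RESOURCE_GROUP_NAME
```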

You can also use the Azure portal to create one by using this link.

Speech-to-text and translation in the browser

The Cognitive Services Speech SDK is easy to use. To get started, we'll pull it into our Vue app:

```bash
npm install microsoft-cognitiveservices-speech-sdk
```

Then we just need to initialize and start it:

```javascript
// at the top of the module
import { AudioConfig, SpeechTranslationConfig, TranslationRecognizer } from 'microsoft-cognitiveservices-speech-sdk'

// listen to the device's microphone
const audioConfig = AudioConfig.fromDefaultMicrophoneInput()

// use the key and region created for the Speech Services account
const speechConfig = SpeechTranslationConfig.fromSubscription(options.key, options.region)

// configure the language to listen for (e.g., 'en-US')
speechConfig.speechRecognitionLanguage = options.fromLanguage

// add one or more languages to translate to
for (const lang of options.toLanguages) {
  speechConfig.addTargetLanguage(lang)
}

this._recognizer = new TranslationRecognizer(speechConfig, audioConfig)

// assign callback when text is recognized ('recognizing' is a partial result)
this._recognizer.recognizing = this._recognizer.recognized = recognizerCallback.bind(this)

// start the recognizer
this._recognizer.startContinuousRecognitionAsync()
```

And that's it! The recognizerCallback method will be invoked whenever text has been recognized. It receives the recognizer and an event argument whose result property holds all the translations we asked for. For example, we can obtain the French translation with e.result.translations.get('fr').
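The callback's body isn't shown here. As a hedged sketch of one piece of it, the hypothetical `buildCaptionPayload` helper below turns a translation result into a plain object with an `offset` and a `languages` map (the shape the group-based broadcast function near the end of this article reads). It assumes only that the result exposes `offset` and a `translations` object with a `get(language)` method, as the SDK's `TranslationRecognitionResult` does; a `Map` stands in for that object so the sketch runs on its own.

```javascript
// Hypothetical helper (not part of the Speech SDK): flatten a translation
// result into a JSON-friendly caption payload:
//   { offset, languages: { <code>: <text>, ... } }
function buildCaptionPayload(result, toLanguages) {
  const languages = {};
  for (const code of toLanguages) {
    // assumed to return undefined when no translation exists for `code`
    const text = result.translations.get(code);
    if (text !== undefined) {
      languages[code] = text;
    }
  }
  // the offset identifies the utterance; partial and final results for
  // the same utterance are assumed to share it
  return { offset: result.offset, languages };
}

// Stand-in for a recognition result; a Map provides get(code)
const fakeResult = {
  offset: 12000000,
  translations: new Map([['fr', 'Bonjour à tous'], ['de', 'Hallo zusammen']])
};

console.log(JSON.stringify(buildCaptionPayload(fakeResult, ['fr', 'de', 'es'])));
// {"offset":12000000,"languages":{"fr":"Bonjour à tous","de":"Hallo zusammen"}}
```

In the real callback, the payload would be built from the event's result and then POSTed to the broadcast function described next.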

Broadcast captions to other clients

Now that we can obtain captions and translations thanks to the Cognitive Services Speech SDK, we need to broadcast that information to all viewers who are connected to SignalR Service via WebSocket so that they can display captions in real-time.

First, we'll create an Azure Function that our UI can call whenever new text is recognized. It's a basic HTTP function that uses an Azure SignalR Service output binding to send messages.

The output binding is configured in function.json. It takes a SignalR message object returned by the function and sends it to all clients connected to a SignalR Service hub named captions.

```json
{
  "disabled": false,
  "bindings": [
    {
      "authLevel": "anonymous",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": [
        "post"
      ]
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    },
    {
      "type": "signalR",
      "name": "$return",
      "hubName": "captions",
      "direction": "out"
    }
  ]
}
```

The function simply takes the incoming payload, which includes translations in all available languages, and relays it to clients using SignalR Service. (Sending every language to every client is quite inefficient; we'll improve on this later with SignalR groups.)

```javascript
module.exports = async (context, req) => ({
  target: 'newCaption',
  arguments: [req.body]
});
```
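Because the SignalR output binding sends whatever the function returns, the handler can be checked locally without the Functions host or a SignalR Service instance. A minimal sketch, assuming a plain Node.js script: the handler is the same as above but kept in a named constant instead of `module.exports`, and the context and request objects are simplified stand-ins rather than real Azure Functions types.

```javascript
// Same handler as above, held in a constant so it can be called directly
const broadcastCaption = async (context, req) => ({
  target: 'newCaption',
  arguments: [req.body]
});

async function demo() {
  // Minimal stand-ins: the handler only reads req.body
  const fakeContext = { log: console.log };
  const fakeReq = { body: { offset: 0, languages: { fr: 'Bonjour', de: 'Hallo' } } };

  const message = await broadcastCaption(fakeContext, fakeReq);
  console.log(JSON.stringify(message));
  // {"target":"newCaption","arguments":[{"offset":0,"languages":{"fr":"Bonjour","de":"Hallo"}}]}
}

demo();
```

Every connected client receives that one message with all of its languages, which is exactly the inefficiency mentioned above.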

Back in our Vue app, we bring in the SignalR SDK:

```bash
npm install @aspnet/signalr
```

Note that even though this package is under the @aspnet org on npm, it's the JavaScript client for SignalR. It may move to a different org later to make it easier to find.

When an audience member decides to join the captioning session and our Vue component is mounted, we'll start a connection to SignalR Service.

```javascript
// assumes the component imports the client and the app's constants:
// import * as signalR from '@aspnet/signalr'
async mounted() {
  this.connection = new signalR.HubConnectionBuilder()
    .withUrl(`${constants.apiBaseUrl}/api`)
    .build()

  this.connection.on('newCaption', onNewCaption.bind(this))

  await this.connection.start()
  console.log('connection started')

  function onNewCaption(caption) {
    // add the caption for the selected language to the view model
    // Vue updates the screen
  }
}
```

Whenever a newCaption event arrives, the onNewCaption callback function is invoked. We pick out the caption that matches the viewer's selected language and add it to the view model. Vue does the rest and updates the screen with the new caption.
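The body of onNewCaption is left as comments above. One way to fill it in, sketched under two assumptions: captions arrive as `{ offset, languages }` (every language, as in this first version), and partial and final results for one utterance share an `offset`, so a newer result for the same offset should overwrite the on-screen line rather than add another one. `applyCaption`, `lines`, and `maxLines` are hypothetical names.

```javascript
// Hypothetical view-model update for onNewCaption.
// Returns a new array so that assigning it to a Vue data property
// triggers a re-render.
function applyCaption(lines, caption, selectedLanguage, maxLines = 5) {
  const text = caption.languages[selectedLanguage];
  if (text === undefined) {
    return lines; // nothing for this viewer's language
  }
  const last = lines[lines.length - 1];
  let next;
  if (last && last.offset === caption.offset) {
    // Same utterance: replace the partial line with the newer text
    next = [...lines.slice(0, -1), { offset: caption.offset, text }];
  } else {
    // New utterance: append a line
    next = [...lines, { offset: caption.offset, text }];
  }
  // Keep only the most recent lines on screen
  return next.slice(-maxLines);
}

let lines = [];
lines = applyCaption(lines, { offset: 1, languages: { fr: 'Bonjour' } }, 'fr');
lines = applyCaption(lines, { offset: 1, languages: { fr: 'Bonjour à tous' } }, 'fr');
lines = applyCaption(lines, { offset: 2, languages: { fr: 'Bienvenue' } }, 'fr');
console.log(lines.map(l => l.text).join(' | ')); // Bonjour à tous | Bienvenue
```

Inside the component, onNewCaption would then do something like `this.lines = applyCaption(this.lines, caption, this.toLanguageCode)`.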

We also add some code to disconnect from SignalR Service when the Vue component is destroyed (e.g., when the user navigates away from the view).

```javascript
async beforeDestroy() {
  if (this.connection) {
    await this.connection.stop()
    console.log('connection stopped')
  }
}
```

And that's pretty much the whole app! It captures speech from the microphone, translates it to multiple languages, and broadcasts the translations in real time to thousands of people.

Increase efficiency with SignalR groups

There's a flaw in the app we've built so far: each viewer receives captions in every available language, even though they only need the one they've selected. Captions are sometimes sent several times per second, so sending every language to every client wastes a lot of bandwidth. We can see this by inspecting the WebSocket traffic.
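To put a rough number on the waste, here is a small sketch comparing the serialized size of one all-languages caption with the single-language message a French-speaking viewer actually needs. The sample sentences are invented, the `{ language, offset, text }` shape mirrors the per-language captions used later with groups, and SignalR's own message framing is ignored, so treat the result as an illustration rather than a measurement.

```javascript
// Illustrative only: sample sentences are invented; real sizes depend on
// the languages configured and the length of each caption.
const fullCaption = {
  offset: 12000000,
  languages: {
    en: 'Welcome, everyone, to this session on serverless apps',
    fr: 'Bienvenue à tous dans cette session sur les applications serverless',
    de: 'Willkommen zu dieser Sitzung über serverlose Anwendungen',
    es: 'Bienvenidos a esta sesión sobre aplicaciones sin servidor'
  }
};

// What a viewer who selected `language` actually needs
function singleLanguageCaption(caption, language) {
  return { language, offset: caption.offset, text: caption.languages[language] };
}

// Size of the JSON text in UTF-8 bytes (TextEncoder is global in browsers and Node.js)
const jsonBytes = obj => new TextEncoder().encode(JSON.stringify(obj)).length;

const fullSize = jsonBytes(fullCaption);
const singleSize = jsonBytes(singleLanguageCaption(fullCaption, 'fr'));
console.log(`all languages: ${fullSize} bytes, one language: ${singleSize} bytes`);
```

The gap widens with each language added, and it is paid on every message; with partial results arriving several times per second, that adds up for every connected viewer.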

To solve problems like this, SignalR Service has a concept called "groups". Groups allow the application to place users into arbitrary groups. Instead of broadcasting messages to everyone who is connected, we can target messages to a specific group. In our case, we'll treat each instance of the Vue app as a "user", and we will place each of them into a single group based on their selected language.

Instead of sending a single message containing every language to everyone, we will send smaller, targeted messages that each contain only a single language. Each message is sent to the group of users who have chosen to receive captions in that language.

Add a unique client ID

We can generate a unique ID that represents the Vue instance when the app starts up. The first step to using groups is for the app to authenticate to SignalR Service using that identifier as the user ID. We achieve this by modifying our negotiate Azure Function. The SignalR client calls this function to retrieve an access token that it will use to connect to the service. So far, we've been using anonymous tokens.
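How the ID is generated isn't specified here. A minimal sketch, assuming a random UUID is acceptable: `createClientId` is a hypothetical helper that uses the Web Crypto `randomUUID()` where available and falls back to a non-cryptographic random string otherwise. Both forms contain only URL-safe characters, which is convenient because the ID ends up in the negotiate route.

```javascript
// Hypothetical helper: create an ID once per app instance.
function createClientId() {
  // Web Crypto API: modern browsers (secure contexts) and recent Node.js versions
  if (globalThis.crypto && typeof globalThis.crypto.randomUUID === 'function') {
    return globalThis.crypto.randomUUID();
  }
  // Fallback: not cryptographically strong, but fine for telling clients apart
  return Date.now().toString(36) + Math.random().toString(36).slice(2);
}

// prints a random ID, different on every run
console.log(createClientId());
```

In the Vue app this could be called once at startup, for example in the root component's `data()`, and stored as `clientId`.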

We'll start by changing the route of the negotiate function to include the user ID. We then use the user ID passed in the route as the user ID in the SignalRConnectionInfo input binding. The binding generates a SignalR Service token that is authenticated to that user.

```json
{
  "disabled": false,
  "bindings": [
    {
      "authLevel": "anonymous",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "methods": [
        "post"
      ],
      "route": "{userId}/negotiate"
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    },
    {
      "type": "signalRConnectionInfo",
      "direction": "in",
      "userId": "{userId}",
      "name": "connectionInfo",
      "hubName": "captions"
    }
  ]
}
```

There are no changes required in the actual function itself.
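The negotiate function's code isn't listed in this article. For orientation, here is a minimal sketch in the usual shape of a JavaScript function that uses the SignalRConnectionInfo input binding: input bindings are passed to the handler after `req`, so `connectionInfo` (the binding name from function.json) arrives as the third argument, and the function simply returns it as the HTTP response body. Because the binding applies the user ID, this code stays the same. In the Functions app it would be assigned to `module.exports`; here it is a named function so it can be called with stand-in objects.

```javascript
// negotiate: return the connection info (service URL plus an access token
// for the user in the route) produced by the signalRConnectionInfo binding
async function negotiate(context, req, connectionInfo) {
  context.res = { body: connectionInfo };
}

// Local check with made-up stand-in values (not a real URL or token)
const ctx = {};
negotiate(ctx, {}, { url: 'https://example.invalid', accessToken: 'fake-token' })
  .then(() => console.log(ctx.res.body.url)); // https://example.invalid
```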

Next, we need to change our Vue app to pass the ID in the route (clientId is the unique ID generated by this instance of our app):

```javascript
this.connection = new signalR.HubConnectionBuilder()
  .withUrl(`${constants.apiBaseUrl}/api/${this.clientId}`)
  .build()
```

The SignalR client will append /negotiate to the end of the URL and call our function with the user ID.
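Concretely, the request the client makes can be sketched with a hypothetical `negotiateUrl` helper. It assumes a local Functions host on its default port 7071 and a made-up client ID, and it runs the ID through `encodeURIComponent` so that characters such as spaces or `?` are escaped before they reach the `{userId}` route segment (the code above interpolates the ID as-is, which is fine for UUID-style IDs). Newer SignalR clients may also append a query string to the negotiate request.

```javascript
// Hypothetical helper: build the hub URL passed to withUrl() and the
// negotiate URL the SignalR client derives from it.
function negotiateUrl(apiBaseUrl, clientId) {
  const hubUrl = `${apiBaseUrl}/api/${encodeURIComponent(clientId)}`;
  return { hubUrl, negotiate: `${hubUrl}/negotiate` };
}

const urls = negotiateUrl('http://localhost:7071', 'client-123');
console.log(urls.negotiate); // http://localhost:7071/api/client-123/negotiate
```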

Add the client to a group

Now that each client connects to SignalR Service with a unique user ID, we'll need a way to add a user ID to the group that represents the client's selected language.

We can do this by creating an Azure Function named selectLanguage that our app will call to add itself to a group. Like the function that sends messages to SignalR Service, this function also uses the SignalR output binding. Instead of passing SignalR messages to the output binding, we'll pass group action objects that are used to add and remove users to and from groups.

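```javascript
const constants = require('../common/constants');

module.exports = async function (context, req) {
  const { languageCode, userId } = req.body;

  // one group action per supported language: join the selected
  // language's group and leave all the others
  const signalRGroupActions =
    constants.languageCodes.map(lc => ({
      userId: userId,
      groupName: lc,
      action: (lc === languageCode) ? 'add' : 'remove'
    }));

  // sent through a SignalR output binding named signalRGroupActions,
  // declared in this function's function.json
  context.bindings.signalRGroupActions = signalRGroupActions;
};
```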

The function is invoked with a languageCode and a userId in the body. We output a SignalR group action for each language that our application supports, setting an action of add for the language we have chosen to subscribe to and remove for all the remaining languages. This ensures that the client leaves any group it joined previously.
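To make the output concrete, here is the same mapping pulled into a pure function and run with an example language list. `buildGroupActions` is a hypothetical name, and `['en', 'fr', 'de']` stands in for whatever `constants.languageCodes` contains in the real app.

```javascript
// Same mapping as selectLanguage, as a pure function
function buildGroupActions(languageCodes, languageCode, userId) {
  return languageCodes.map(lc => ({
    userId,
    groupName: lc,
    action: lc === languageCode ? 'add' : 'remove'
  }));
}

const actions = buildGroupActions(['en', 'fr', 'de'], 'fr', 'client-123');
console.log(actions.map(a => `${a.action} ${a.groupName}`).join(', '));
// remove en, add fr, remove de
```

One consequence of this design: if languageCode isn't one of the supported codes, every action is remove and the client ends up in no group at all, so validating the code (for example, answering with HTTP 400) before emitting actions may be worthwhile.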

Lastly, we need to modify our Vue app to call the selectLanguage function when our component is created. We do this by creating a watch on the language code that will call the function whenever the user updates its value. In addition, we'll set the immediate property of the watch to true so that it will call the function immediately when the watch is initially created.

```javascript
// assumes axios and the app's constants module are imported in this component
methods: {
  async updateLanguageSubscription(languageCode) {
    await axios.post(`${constants.apiBaseUrl}/api/selectlanguage`, {
      languageCode,
      userId: this.clientId
    })
  }
},

watch: {
  toLanguageCode: {
    handler() {
      return this.updateLanguageSubscription(this.toLanguageCode)
    },
    immediate: true
  }
},
```

Send messages to groups

The last thing we have to do is modify our Azure Function that broadcasts the captions to split each message into one message per language and send each to its corresponding group. To send a message to a group of clients instead of broadcasting it to all clients, add a groupName property (set to the language code) to the SignalR message:

```javascript
module.exports = async function (context, req) {
  const captions = req.body;

  const languageCaptions = Object.keys(captions.languages).map(captionLanguage => ({
    language: captionLanguage,
    offset: captions.offset,
    text: captions.languages[captionLanguage]
  }));

  const signalRMessages = languageCaptions.map(lc => ({
    target: 'newCaption',
    groupName: lc.language,
    arguments: [ lc ]
  }));

  return signalRMessages;
};
```
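Here is what one incoming payload turns into, using the same transformation rewritten as a pure function. `toGroupMessages` is a hypothetical name and the sample values are made up.

```javascript
// Same logic as the function above: one SignalR message per language,
// each routed to the group named after that language
function toGroupMessages(captions) {
  return Object.keys(captions.languages).map(language => ({
    target: 'newCaption',
    groupName: language,
    arguments: [{ language, offset: captions.offset, text: captions.languages[language] }]
  }));
}

const messages = toGroupMessages({ offset: 42, languages: { fr: 'Bonjour', de: 'Hallo' } });
console.log(messages.length); // 2
console.log(JSON.stringify(messages[0]));
// {"target":"newCaption","groupName":"fr","arguments":[{"language":"fr","offset":42,"text":"Bonjour"}]}
```

On the viewer side, onNewCaption now receives a single `{ language, offset, text }` object instead of the full languages map, so it can use `caption.text` directly.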

Now when we run the app, it still works the same as it did before, but if we inspect the SignalR traffic over the WebSocket connection, each caption only contains a single language.

Next steps

- Check out the source code on GitHub

- Deploy the app — more details in the SignalR Service serverless programming guide

- Explore Azure Speech Services and the SignalR Service bindings for Azure Functions

Thoughts? Questions? Leave a comment below or find me on Twitter.
