Let’s Stop Talking About Serverless Cold Starts

I was eating dinner the other day with my family. My four-year-old looked at her plate and said to me, “I don’t want this chicken.”

Naturally, I asked her why, to which she responded, “It has sauce on it.”

See, a few months ago we had a meal with a glaze on it that she….. hated. Ever since then, anything that looked like sauce was a no-go for her. If it wasn’t a solid, it was sauce, and she would reject it.

Super annoying as a parent 😒.

She latched onto an idea, generalized it, and was now too worked up to try anything related.

Sound familiar?

In every presentation I’ve given on serverless, someone has brought up cold starts. They heard about them a few years ago and have latched onto the idea that as long as cold starts exist, serverless is a non-starter for them.

So I asked a probing question on Twitter.

Can someone give me an example where #lambda function cold starts gave you legitimate issues in production?

— Allen Helton (@AllenHeltonDev) August 26, 2022

I received a variety of answers, but the most common response I saw was “it’s not a real problem in production”.

There are exceptions of course, but most of the exceptions have valid workarounds. Let’s dive in and see if we can put this argument to bed once and for all.

What Are Cold Starts?

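Cold starts occur on the first request to invoke your function, after your function has sat idle long enough for Lambda to reclaim its environments, or when every existing execution environment is busy processing other requests.

In each case, Lambda must initialize a new execution environment (often called a container) to execute your code, and that initialization takes time. Hence, cold starts.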

This means cold starts can happen when users start logging into your application for the day or when a burst of traffic comes through. A traffic burst is a period of minimal usage followed by a big spike in traffic. Bursts cause cold starts because Lambda has to scale out, creating new execution environments to match the increased demand.

With this in mind, some applications are more prone to cold starts than others. Applications with a sustained amount of usage throughout the day will see fewer cold starts once Lambda has scaled up to the appropriate amount of concurrent execution environments.
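To make that scaling behavior concrete, here is a toy Python model. It is a simplification, not how Lambda is implemented internally. Each execution environment handles one request at a time, and a request reuses any idle environment. A new environment (a cold start) is created only when every existing one is busy. The model assumes environments are never reclaimed, so idle timeouts are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class EnvironmentPool:
    """Toy model: each execution environment serves one request at a time.

    Assumption: environments are never reclaimed, so idle time is ignored.
    """
    busy_until: list = field(default_factory=list)  # one entry per environment
    cold_starts: int = 0

    def invoke(self, arrival: float, duration: float) -> bool:
        """Route one request; return True if it triggered a cold start."""
        for i, free_at in enumerate(self.busy_until):
            if free_at <= arrival:
                # An existing environment is idle: reuse it (warm start).
                self.busy_until[i] = arrival + duration
                return False
        # Every existing environment is busy: a new one must be initialized.
        self.busy_until.append(arrival + duration)
        self.cold_starts += 1
        return True


def count_cold_starts(requests):
    """requests: iterable of (arrival_seconds, duration_seconds) tuples."""
    pool = EnvironmentPool()
    for arrival, duration in sorted(requests):
        pool.invoke(arrival, duration)
    return pool.cold_starts
```

Three evenly spaced requests cause a single cold start, while three overlapping requests cause three. A fourth request that arrives after the burst has finished reuses a warm environment. That is why sustained traffic sees fewer cold starts than spiky traffic.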

What Causes Cold Starts to Be Slow?

There are a few things that directly impact the duration of a cold start:

- Runtime - The programming language you are using.

- Package Size - The amount of data that needs to be loaded to run your code. This includes layers!

- Startup Logic - The connections and initializations defined outside of the main handler in your function.

Runtime

If Java is your runtime of choice, I have bad news: it is the slowest runtime for cold starts. According to research from Thundra, Java’s cold starts were about 7x slower than those of the second slowest runtime, .NET.

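Compiled languages like Java and .NET, which run on a virtual machine, have slower cold starts than interpreted languages like Python and Node. Go is the exception: although it is compiled, it produces a native binary with no virtual machine to start, so its cold starts land in the same fast range as Python and Node.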

Once function containers have warmed up, performance converges to roughly the same duration between compiled and interpreted languages.

Package Size

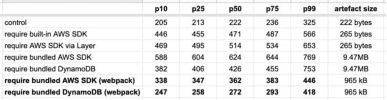

A great case study was performed by Lumigo around performance impact of cold starts as it relates to package size and importing of dependencies. They found that as function package size increases, cold start duration increases, even if additional dependencies aren’t being consumed!

Cold start comparison of package sizes. Source: Lumigo

When you add more imports to a function, the runtime must resolve and load those dependencies, which adds to the cold start time. So be careful about importing entire packages when you only need specific components from them.
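One related technique in Python is to defer imports that only a rarely used code path needs. This is a swapped-in illustration, not something from Lumigo’s study. Here `csv` and `io` stand in for a heavy dependency, and the `action`, `id`, and `total` event fields are hypothetical.

```python
import json  # small and needed on every request, so it stays at module level


def handler(event, context):
    if event.get("action") == "report":
        # Deferred imports: only requests that build a report load these modules.
        # In a real function this would be the heavy dependency.
        import csv
        import io

        buf = io.StringIO()
        writer = csv.writer(buf)
        writer.writerow(["id", "total"])
        writer.writerow([event["id"], event["total"]])
        return {"statusCode": 200, "body": buf.getvalue()}
    return {"statusCode": 200, "body": json.dumps({"ok": True})}
```

Python caches imported modules in `sys.modules`, so only the first report request on each execution environment pays the import cost. This trims work from the init phase, but it does not shrink the package itself.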

If you find yourself importing more and more resources in a single function, it might be time to reassess and evaluate using Step Functions instead to coordinate your workflow.
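As a rough sketch of that split, the Python below builds an Amazon States Language definition. It chains three small Task states, each backed by its own Lambda function. The state names and function ARNs (including the account ID) are hypothetical placeholders.

```python
import json

# Hypothetical ARNs: each function keeps only the imports its own step needs.
VALIDATE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:validate-order"
CHARGE_ARN = "arn:aws:lambda:us-east-1:123456789012:function:charge-payment"
RECEIPT_ARN = "arn:aws:lambda:us-east-1:123456789012:function:send-receipt"

definition = {
    "Comment": "Order workflow split across three small functions",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {"Type": "Task", "Resource": VALIDATE_ARN, "Next": "ChargePayment"},
        "ChargePayment": {"Type": "Task", "Resource": CHARGE_ARN, "Next": "SendReceipt"},
        "SendReceipt": {"Type": "Task", "Resource": RECEIPT_ARN, "End": True},
    },
}

# Amazon States Language is JSON; this string is the state machine definition.
ASL_DEFINITION = json.dumps(definition, indent=2)
```

Step Functions handles the sequencing. Each function can then ship a smaller package with a shorter init phase than one function that imports everything.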

Startup Logic

It is considered a best practice to define SDK clients and cacheable variables globally in a Lambda function. This allows a function to reuse any connections it has established in subsequent runs.
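Here is a minimal Python sketch of that pattern. `create_client` is a hypothetical stand-in for a real SDK client such as `boto3.client("dynamodb")`. It counts its calls and sleeps briefly to simulate setup cost, so you can see that module-scope code runs once per execution environment, not once per invocation.

```python
import time

INIT_CALLS = 0  # how many times the expensive setup has run


def create_client():
    """Hypothetical stand-in for an SDK client, e.g. boto3.client("dynamodb")."""
    global INIT_CALLS
    INIT_CALLS += 1
    time.sleep(0.05)  # simulate connection and credential setup
    return object()


# Module scope: runs once, during the init phase of a new execution environment.
CLIENT = create_client()
CACHE = {}  # survives across invocations on the same warm environment


def handler(event, context):
    key = event["key"]
    if key not in CACHE:
        # Pretend this value came from a lookup made through CLIENT.
        CACHE[key] = f"value-for-{key}"
    return {"client_id": id(CLIENT), "value": CACHE[key]}
```

Two invocations on the same environment share one client, and the setup counter stays at 1.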

On the flip side, this adds to your cold start time, because those clients and variables are set up during the function container’s initialization phase.

This is one of the accepted trade-offs with serverless, and there’s not much you can do to optimize it. The thought process behind declaring things globally is that the initialization code only needs to run once for the entire lifecycle of a function container. If the clients were defined inside the main handler, you’d take the performance hit every time the function was executed, regardless of whether or not the function was warm.

Are Cold Starts Really a Problem?

Back to my original question on Twitter. Are there real production use cases where a function cold start is a problem?

For interpreted languages like Python and Node (and for Go), your average cold start is going to cost you less than 500ms, while Java and .NET will run you longer (around 5 seconds for Java). Since only requests that land on a brand-new execution environment pay that cost, an extra 500ms does not seriously impact the majority of production use cases.

You will see latency as your application starts to scale, but is that truly a showstopper?
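Before deciding, it helps to measure how often cold starts actually reach your users. A common pattern, sketched here in Python, is a module-level flag that is `True` only for the first invocation on each execution environment. The log field names are an arbitrary choice, not a standard format. Lambda’s own `REPORT` log line also includes an `Init Duration` value on invocations that cold started.

```python
import json

_IS_COLD = True  # module scope: set once per execution environment


def handler(event, context):
    global _IS_COLD
    cold_start, _IS_COLD = _IS_COLD, False
    # One structured log line per invocation; a log query can then compute
    # what share of requests were cold and how their latency compares.
    print(json.dumps({"cold_start": cold_start, "path": event.get("path")}))
    return {"statusCode": 200, "body": json.dumps({"cold_start": cold_start})}
```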

It might be for some applications that require split-second updates, like a stock market exchange app or an emergency CAD (Computer-Aided Dispatch) system. But for everything else, that seems like an easy trade-off given the scalability, reliability, and availability that Lambda offers.

Much like my four-year-old and her distaste for “sauce”, people who reject serverless often latch on to the term “cold starts” and won’t even try it. The fear and ambiguity surrounding serverless cold starts turn too many serverless projects into non-starters.

This has to change.

Whether you reduce your package size, boost the allocated memory, or add provisioned concurrency to your functions, you have options to minimize your exposure to cold starts.
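For the provisioned concurrency option, here is a minimal Python sketch built on the boto3 Lambda client’s `put_provisioned_concurrency_config` and `get_provisioned_concurrency_config` calls. The client is passed in so the helpers can be exercised without AWS access. The function name and alias in the usage note are hypothetical.

```python
def enable_provisioned_concurrency(lambda_client, function_name, alias, warm_environments):
    """Ask Lambda to keep `warm_environments` initialized for one alias.

    lambda_client is expected to be boto3.client("lambda").
    Provisioned concurrency attaches to a published version or alias, not $LATEST.
    """
    return lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,
        ProvisionedConcurrentExecutions=warm_environments,
    )


def is_ready(lambda_client, function_name, alias):
    """True once Lambda reports the provisioned environments as initialized."""
    config = lambda_client.get_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,
    )
    return config["Status"] == "READY"
```

Usage would look like `enable_provisioned_concurrency(boto3.client("lambda"), "checkout-api", "live", 5)`. Keep in mind that provisioned concurrency is billed for as long as it is configured, so reserve it for latency-sensitive synchronous functions.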

By adhering to serverless best practices, you can easily avoid situations where a synchronous request is waiting on multiple Lambda functions to cold start and return a response. You can even implement caching at every layer of your infrastructure to short-circuit lookups.
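At the function layer, a cache can be as simple as a module-scope dictionary with a time-to-live. Here is a minimal Python sketch; the 60-second TTL and the `loader` callback are illustrative assumptions. The clock is injectable so expiry can be tested without waiting.

```python
import time

_CACHE = {}  # module scope: shared by all invocations on one warm environment
TTL_SECONDS = 60.0


def cached_lookup(key, loader, clock=time.monotonic):
    """Return a cached value for key, calling loader(key) only on a miss or expiry."""
    now = clock()
    entry = _CACHE.get(key)
    if entry is not None and entry[1] > now:
        return entry[0]  # hit: skip the downstream call entirely
    value = loader(key)
    _CACHE[key] = (value, now + TTL_SECONDS)
    return value
```

Because this cache lives inside one execution environment, a freshly cold-started environment begins empty. Caches further out, such as at the API or CDN layer, can answer requests without invoking the function at all.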

All this is to say: if you are worried about cold starts, there are ways to mitigate them. Since cold starts primarily affect synchronous calls like API requests, you can take a storage-first approach. In that approach, the request is written directly to a durable service such as a queue and processed asynchronously, which takes the burden of waiting for cold starts away from the caller.
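Here’s a minimal Python sketch of the consuming side. It assumes API Gateway writes each request straight to an SQS queue through a direct service integration. It also assumes the queue triggers this function with `ReportBatchItemFailures` enabled on the event source mapping. `process_order` is a hypothetical placeholder for your business logic.

```python
import json


def process_order(order):
    """Hypothetical business logic; raises ValueError to mark a record as failed."""
    if "id" not in order:
        raise ValueError("order is missing an id")


def handler(event, context):
    # The caller already got its response when the message was queued,
    # so a cold start here delays processing, not the user.
    failures = []
    for record in event["Records"]:
        try:
            process_order(json.loads(record["body"]))
        except ValueError:  # also covers json.JSONDecodeError, a ValueError subclass
            # Report only this message as failed so SQS retries it alone.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```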

Conclusion

Cold starts are very real in the serverless world, but they aren’t a problem in most cases. Stop hiding from something that got way too much hype to begin with. Acknowledge the difficulty of what AWS is abstracting away from us, and embrace the latency, which is minimal compared to the problem it solves.

There’s no reason to be afraid of cold starts. Selecting a fast-starting runtime like Python, Node, or Go and practicing proper package management will keep your cold starts down to an almost negligible time. If changing programming languages is not an option, which is the case at many companies, consider adding provisioned concurrency to keep your synchronous functions warm at all times.

It’s time we end the “but what about cold starts” comments when talking about serverless. Yes, they are real, but are they a real problem? Not anymore.

Happy coding!